Warren B. Powell

Everyone wants the simplest possible algorithms, especially in machine learning and reinforcement learning/stochastic optimization. Some examples are:

- Stepsize rules for stochastic gradient algorithms (virtually all have at least one tunable parameter).

- Order-up-to policies for inventory problems.

- Control limit policies:

- Vaccinate people older than \theta.

- Put on a coat when it is colder than \theta degrees).

- Purchase the product if the price is less than \theta.

- Parameterized optimization models (see parametric cost function approximations).

- Most algorithms for derivative-free stochastic optimization.

- Upper confidence bounding policies for bandit problems, such as:

X^\pi(S^n|\theta)=argmax_x({\bar \mu}^n_x + \theta {\bar \sigma}^n_x) (1)

There are endless examples of methods for making decisions which involve tunable parameters. But as I repeat several times in my book, “the price of simplicity is tunable parameters… and tuning is hard!”

This webpage highlights some important issues in the design of algorithms that have been largely overlooked in the research literature dealing with learning/stochastic optimization.

Tuning as an optimization problem

Tuning can be stated as an optimization problem typically using one of two optimization formulations. The most classical form is given by

max_\theta F(\theta) = \mathbb{E}\{F(\theta,W)|S^0\} (2)

If we are tuning the parameters of a policy (such as equation (1)) that has to be evaluated over time, we might write

max_\theta \mathbb{E}\left\{\sum_{t=0}^TC(S_t,X^\pi(S_t|\theta))|S_0\right\} (3)

where S_{t+1}=S^M(S_t,X^\pi(S_t|\theta),W_{t+1}) and where the sequence of random variables W_1, \ldots, W_T might come from a mathematical model (using Monte Carlo simulation) or from history.

The optimization problems in (2) and (3) can be approached using either derivative-based (see chapter 5 in RLSO) or derivative-free (see chapter 7 in RLSO) stochastic search problems. Both of these chapters make the point that any stochastic search algorithm can be posed as a sequential decision problem (which has its own tunable parameters).

The importance of tuning

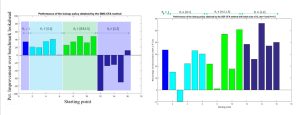

Experimentalists are generally aware of the importance of tuning, but the need to tune and re-tune parameters is easy to overlook, often because it is time consuming, and has to be done manually. The figure below on the left shows the results of parameter tuning when starting the process in one of four ranges (indicated by the four colors). The fourth range (farthest to the right) shows the stochastic optimization algorithm producing poor solutions. The figure on the right shows consistently better performance for all four starting regions, but this was achieved only by re-tuning the stepsize rule used by the stochastic gradient algorithm.

Tuning the parameters in the stepsize rule is easy to overlook. Good experimentalists recognize the need to tune these parameters, but the idea of re-tuning when we change the starting point (for example) is not at all standard practice, and may explain why algorithms can fail.

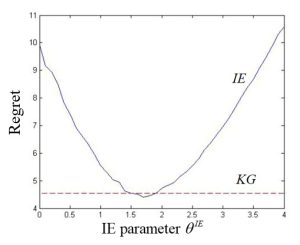

The figure to the right shows the regret (smaller is better) comparing the UCB policy (known as interval estimation) in equation (1) above, to a more sophisticated policy called the knowledge gradient (see section 7.7.2 in RLSO) which is a one-step lookahead. KG does not have any tunable parameters, but requires some moderately sophisticated calculations. The figure demonstrates that the much simpler UCB policy (interval estimation) can outperform KG, but only if the tunable parameter \theta is carefully tuned.

(known as interval estimation) in equation (1) above, to a more sophisticated policy called the knowledge gradient (see section 7.7.2 in RLSO) which is a one-step lookahead. KG does not have any tunable parameters, but requires some moderately sophisticated calculations. The figure demonstrates that the much simpler UCB policy (interval estimation) can outperform KG, but only if the tunable parameter \theta is carefully tuned.

Tuning can be quite difficult. Typically we would perform tuning with a simulator, as was done in the figure above for tuning \theta in the UCB policy in equation (1). However, the simulations are extremely noisy. To obtain the smooth curve in the figure above, we had to run 1000 simulations for each value of \theta. The optimal value also depends on the learning budget. The best value of \theta would change if we have a budget of 50 experiments or 500, as well as being dependent on other problem parameters.

Dependence on the initial state

The optimization problem in equation (2) expresses the dependence of the problem on the initial state S^0, where we assume we have a search algorithm that produces the sequence

S^0,\theta^0,W^1,S^1,\theta^1,W^2, \ldots, S^n,\theta^n,W^{n+1}, \ldots, S^N,\theta^N.In the objective function in (3), we assume we have a temporal process that can be written

S_0,x_0,W_1,S_1,x_1,W_2, \ldots, S_t,x_t,W_{t+1}, \ldots, S_T,x_T.where x_t = X^\pi(S_t|\theta). We then have an outer loop that searches for \theta using equation (2) where

F(\theta,W) = \mathbb{E}\left\{\sum_{t=0}^TC(S_t,X^\pi(S_t|\theta))|S_0\right\},

starting with S_0 = S^0.

So, regardless of whether we are using the objective function (2) or (3), we start with an initial state S^0. Now let \theta^{\pi,N} be the value of \theta after we have run N iterations of an “algorithm” (search policy) \pi. We might write \theta^{\pi,N} as \theta^{*} to indicate that it is in some sense “optimal,” but what this ignores is that the solution \theta^{\pi,N} depends on S^0, which means we really would like to have the function \theta^{\pi,N}(S^0). Of course, no-one ever tries to derive this function, which means that we face the need to re-tune \theta^{\pi,N} any time S^0 changes.

So just what is in S^0? It really covers anything that affects the behavior of the underlying function F(\theta) or the algorithm that searches for \theta^{\pi,N} (including the initial value \theta^{0}).

The dependence on S^0 has been largely overlooked in the algorithmic literature. In fact, writing the dependence of the objectives on S^0 (as is done in (2) and (3)), is not standard in the stochastic optimization literature (note that it is standard in my book RLSO, for precisely this reason).

Types of tunable parameters

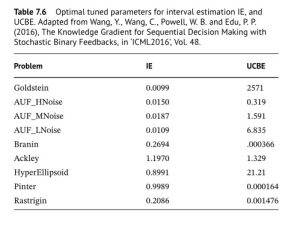

The point needs to be made that not all tunable parameters are made equal. The issue really boils down to scaling. The table to the right shows how two different policies for making decisions: The one under “IE” is given by equation (1)), while the one under “UCBE” uses a different term for capturing uncertainty. The table shows much more variation for the UCBE policy than the IE policy.

issue really boils down to scaling. The table to the right shows how two different policies for making decisions: The one under “IE” is given by equation (1)), while the one under “UCBE” uses a different term for capturing uncertainty. The table shows much more variation for the UCBE policy than the IE policy.

In a nutshell, the more work that goes into designing a function, the less tuning that will be required. A powerful strategy for making sequential decisions is called the “cost function approximation” (see here for a description). CFA policies start by formulating a decision problem as a deterministic optimization model, and then introduces parameters that are designed to make it work better over time, under uncertainty. The initial deterministic optimization problem captures a lot of structure which minimizes scaling as an issue.

Recommendations

Tuning has not received the attention it deserves in the algorithmic research literature. For example, there is an extensive literature for searching over a discrete set of alternatives to learn the best (equation (1) is one example). There are many hundreds of papers proving various regret bounds on these policies, without even mentioning that \theta has to be tuned, which in turn means there is no discussion of how it should be tuned. And as we see in the previous figure, tuning matters.

I believe that every algorithmic paper should be accompanied by a discussion of the requirements for parameter tuning. The discussion should comment on scaling, the level of noise when running simulations, and objective functions for both offline tuning using a simulator (equation (2)) or online tuning in the field (equation (3)). Experimental testing of an algorithm should include experiments from the tuning process.